† Corresponding author. E-mail:

Project supported by the National Natural Science Foundation of China (Grant No. 11375005) and partially by 20150964-SIP-IPN, Mexico.

We extend calculations of the quantum information entropy to a system that is not analytically solvable. Specifically, we numerically investigate the information entropy of a one-dimensional system with a schematic “Landau” potential. In particular, we find that the phase-transitional behavior of the system is well reflected in the evolution of the quantum information entropy. The results also indicate that, at the critical point of the phase transition, the position entropy $S_x$ and the momentum entropy $S_p$ vary with the mass parameter $M$, whereas their sum remains constant, independent of $M$, for a given eigenstate. In addition, the entropic uncertainty relation is found to hold throughout the whole phase transition.

Recently, there has been growing interest in information-theoretic measures for quantum-mechanical systems. As an alternative to the Heisenberg uncertainty relation, the entropic uncertainty relation has received particular attention.[1–3] Among the measures of information entropy, the Shannon entropy[4–6] plays a central role in quantifying uncertainty and has been evaluated for various forms of potentials. The entropic uncertainty relation, which links the position-space and momentum-space entropies, reads[7–9]

$$
S_x + S_p \geq D\,(1 + \ln \pi),
$$

where $S_x$ and $S_p$ are the Shannon entropies in position and momentum space, $D$ is the spatial dimension, and $\hbar = 1$.
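As a standard worked check (not a result of this paper), take $\hbar = 1$ and $D = 1$, so the relation reads $S_x + S_p \geq 1 + \ln\pi$. For a Gaussian position density of variance $\sigma^2$, the momentum density is Gaussian with variance $1/(4\sigma^2)$, and

$$
S_x = \frac{1}{2}\ln\left(2\pi e\,\sigma^2\right), \qquad
S_p = \frac{1}{2}\ln\left(\frac{2\pi e}{4\sigma^2}\right), \qquad
S_x + S_p = \frac{1}{2}\ln\left(\pi^2 e^2\right) = 1 + \ln\pi \approx 2.1447 .
$$

The sum is independent of $\sigma$, even though $S_x$ and $S_p$ separately shift by $+\ln\sigma$ and $-\ln\sigma$. The harmonic-oscillator ground state is such a Gaussian, so it saturates the bound and offers a convenient consistency check for numerically computed entropies.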

Apart from their intrinsic interest, entropic uncertainty relations have been widely used in atomic and molecular physics.[10–14] For example, Shannon information entropies have been obtained analytically for a number of potentials, e.g., the harmonic oscillator,[15] the Pöschl–Teller (PT),[16,17] Morse,[16,18] and Coulomb[19] potentials, and the potential isospectral to the PT potential,[20] as well as for the classical orthogonal polynomials[21–23] and in other studies.[24–26] Owing to their applications in physics, quantum information entropies have also been investigated for other analytically solvable potentials, such as the symmetric and asymmetric trigonometric Rosen–Morse potentials,[27,28] the PT-like potential,[29] a squared tangent potential,[30] the position-dependent mass Schrödinger equation with the null potential,[31] the hyperbolic potential,[32] and the infinite circular well,[33] and the Fisher entropy has been studied for the position-dependent mass Schrödinger equation.[34,35] Notably, previous discussions of the Shannon entropy have focused mostly on analytically solvable potentials, yet not every quantum system of interest has an analytically solvable model. For such systems, an efficient numerical method is particularly important. In this work, we extend the calculation of the Shannon entropy to a one-dimensional system with a schematic “Landau” potential,[36,37] which may be derived formally at the mean-field level from the vibron model for molecular structure[38,39] and can only be solved numerically. In particular, this system exhibits quantum phase transitional behavior as the control parameter of the potential is varied.[36,37]

One way of addressing quantum phase transitions[40] is the potential-energy approach. A schematic “Landau” potential, which is often used to illustrate a quantum phase transition,[36,37] is written as

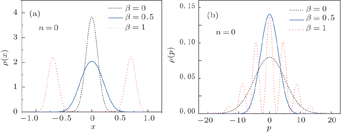
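The diagonalization scheme itself is not spelled out in this text. As a minimal sketch under stated assumptions, the snippet below diagonalizes $H = p^2/(2M) + V(x)$ (assuming the mass parameter enters as $p^2/(2M)$, with $\hbar = 1$) on a uniform grid with a three-point finite-difference kinetic term. This is a swapped-in technique and may differ from the basis used in the paper. Because the exact potential is given only in the equation above, `landau_potential` is a hypothetical stand-in, $V(x) = (1 - 2\beta)x^2 + \beta x^4$, whose quadratic term changes sign at $\beta = 1/2$.

```python
import numpy as np


def landau_potential(x, beta):
    """Hypothetical Landau-type stand-in (NOT necessarily the paper's form):
    a single well for beta < 1/2 and a double well for beta > 1/2."""
    return (1.0 - 2.0 * beta) * x**2 + beta * x**4


def eigenstates(M, beta, n_states=3, x_max=10.0, n_grid=1000):
    """Lowest eigenpairs of H = p^2/(2M) + V(x) with hbar = 1.

    Uses a three-point finite-difference kinetic term and hard walls at
    +/- x_max. Returns the grid, the energies, and wavefunctions psi[:, n]
    normalized so that sum(|psi|^2) * dx = 1.
    """
    x = np.linspace(-x_max, x_max, n_grid)
    dx = x[1] - x[0]
    # Kinetic energy -(1/2M) d^2/dx^2 as a tridiagonal matrix.
    t = 1.0 / (2.0 * M * dx**2)
    H = np.diag(2.0 * t + landau_potential(x, beta))
    H += np.diag(-t * np.ones(n_grid - 1), 1) + np.diag(-t * np.ones(n_grid - 1), -1)
    energies, vecs = np.linalg.eigh(H)  # eigenvalues come out in ascending order
    psi = vecs[:, :n_states] / np.sqrt(dx)  # unit grid vectors -> wavefunctions
    return x, energies[:n_states], psi
```

For instance, `eigenstates(M=50.0, beta=0.5)` would return the three lowest levels of the stand-in potential at its critical point; the hard walls at ±`x_max` are harmless as long as the wavefunctions have decayed well before them.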

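To check the validity of the numerical diagonalization, we calculate the probability density distributions of the eigenfunctions in both position and momentum space, which are defined as

$$
\rho(x) = |\psi_n(x)|^2, \qquad \rho(p) = |\phi_n(p)|^2,
$$

where $\phi_n(p)$ is the Fourier transform of the eigenfunction $\psi_n(x)$.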
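On a grid, the momentum-space density can be obtained with a fast Fourier transform. The sketch below assumes the symmetric convention $\phi(p) = (2\pi)^{-1/2}\int \psi(x)\,e^{-ipx}\,\mathrm{d}x$ with $\hbar = 1$; the function name `densities` and its `pad_factor` parameter are hypothetical. Zero-padding only refines the momentum spacing $\mathrm{d}p = 2\pi/(n_\mathrm{fft}\,\mathrm{d}x)$.

```python
import numpy as np


def densities(x, psi, pad_factor=8):
    """Return (rho_x, p, rho_p) for a wavefunction sampled on a uniform grid x.

    Assumed convention: phi(p) = (2*pi)**-0.5 * integral psi(x) exp(-i p x) dx,
    hbar = 1. Zero-padding to pad_factor * len(x) points refines the momentum
    grid without changing the physics.
    """
    dx = x[1] - x[0]
    n_fft = pad_factor * len(x)
    rho_x = np.abs(psi) ** 2
    # Riemann-sum approximation of the Fourier integral; the phase
    # exp(-i p x[0]) from the grid offset drops out of |phi|^2.
    phi = np.fft.fft(psi, n=n_fft) * dx / np.sqrt(2.0 * np.pi)
    p = 2.0 * np.pi * np.fft.fftfreq(n_fft, d=dx)
    order = np.argsort(p)  # ascending momenta, convenient for plotting
    return rho_x, p[order], np.abs(phi[order]) ** 2
```

By Parseval's theorem the discrete $\rho(p)$ is normalized exactly whenever $\sum_j |\psi_j|^2\,\mathrm{d}x = 1$, which makes normalization a cheap sanity check.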

Once the probability density functions are obtained, the position and momentum space information entropies for the one-dimensional potential can be calculated by using Eqs. (

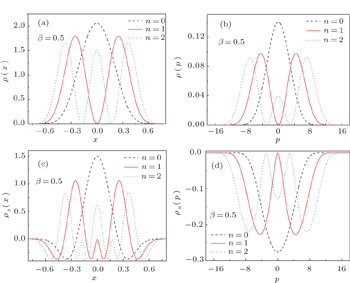
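For reference, the standard one-dimensional Shannon entropies are given below; we assume they coincide with the definitions cited in the text. The entropy densities are written here with the minus sign included, which is an assumed convention (some authors define $\rho_s = \rho\ln\rho$ and place the minus sign in front of the integral instead):

$$
S_x = -\int_{-\infty}^{\infty}\rho(x)\ln\rho(x)\,\mathrm{d}x = \int_{-\infty}^{\infty}\rho_s(x)\,\mathrm{d}x, \qquad
S_p = -\int_{-\infty}^{\infty}\rho(p)\ln\rho(p)\,\mathrm{d}p = \int_{-\infty}^{\infty}\rho_s(p)\,\mathrm{d}p,
$$

with the limit $0\ln 0 = 0$ understood wherever the density vanishes.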

To show the properties of the information entropy densities, we plot the position and momentum entropy densities $\rho_s(x)$ and $\rho_s(p)$, together with the probability densities $\rho(x)$ and $\rho(p)$, in Fig.
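Numerically, the entropy densities are pointwise functions of the sampled probability densities, and the entropies follow by quadrature. A minimal sketch, assuming $\rho_s = -\rho\ln\rho$ and uniform grids (the helper names are hypothetical):

```python
import numpy as np


def entropy_density(rho):
    """rho_s = -rho * ln(rho), enforcing the limit 0 * ln 0 = 0.

    Sign convention assumed here: S = integral of rho_s.
    """
    rho = np.asarray(rho, dtype=float)
    out = np.zeros_like(rho)
    mask = rho > 0.0  # skip zeros to avoid log(0)
    out[mask] = -rho[mask] * np.log(rho[mask])
    return out


def shannon_entropy(grid, rho):
    """S = integral of rho_s over a uniform grid (simple Riemann sum)."""
    step = grid[1] - grid[0]
    return float(np.sum(entropy_density(rho)) * step)
```

For smooth densities that decay well inside the grid, this plain Riemann sum is already very accurate, so no higher-order quadrature is needed.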

By using the information entropy densities, one can calculate the information entropies defined in Eqs. (
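The $M$-independence of $S_x + S_p$ at the critical point can be rationalized by a dilation argument. The sketch below rests on an assumption made only for this illustration: that at $\beta_c$ the potential reduces to a pure quartic $c\,x^4$ with $c > 0$ independent of $M$ (with $\hbar = 1$). Rescaling $x = \lambda y$ gives

$$
\begin{aligned}
H &= \frac{p^2}{2M} + c\,x^4, \qquad x = \lambda y, \quad p = \frac{p_y}{\lambda}, \quad \lambda = (cM)^{-1/6},\\
H &= \frac{1}{M\lambda^2}\left(\frac{p_y^2}{2} + y^4\right)
\;\Rightarrow\;
\psi_n(x) = \lambda^{-1/2}\chi_n(x/\lambda), \qquad \phi_n(p) = \lambda^{1/2}\tilde{\chi}_n(\lambda p),\\
S_x &= S_x[\chi_n] + \ln\lambda, \qquad S_p = S_p[\tilde{\chi}_n] - \ln\lambda, \qquad S_x + S_p = S_x[\chi_n] + S_p[\tilde{\chi}_n],
\end{aligned}
$$

where $\chi_n$ are the $M$-independent eigenfunctions of $p_y^2/2 + y^4$ and $\tilde{\chi}_n$ are their Fourier transforms. Under this assumption, $S_x$ decreases and $S_p$ increases by $\tfrac{1}{6}\ln(cM)$, while their sum is fixed by $n$ alone. Away from a pure power law, the quadratic term introduces a second length scale, and this exact cancellation is no longer guaranteed.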

| Table 1. Position and momentum entropies at the critical point for the low-lying states $n = 0, 1, 2$ with different masses $M$. |

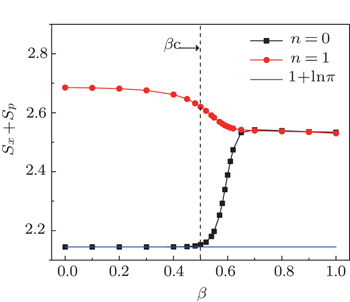

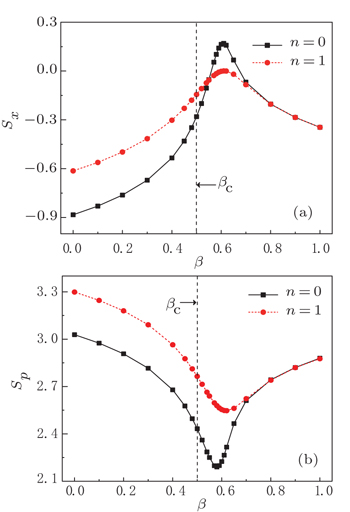

To examine the transitional behavior of the information entropies for $\beta \in [0, 1]$, the calculated $S_x$ and $S_p$ for $n = 0, 1$ are shown in Fig.

To further check the entropic uncertainty relation over the whole transitional region $\beta \in [0, 1]$, the results for $S_x + S_p$ are shown in Fig.

| Fig. 3. Evolution of the position and momentum information entropies $S_x$ (a) and $S_p$ (b) for $n = 0, 1$ as functions of the control parameter $\beta$, with the mass parameter set to $M = 50$. |
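A rough, self-contained sketch of the scans discussed above ($S_x$ and $S_p$ versus $\beta$, and the check of $S_x + S_p$ against the bound) is given below. It combines a finite-difference diagonalization, an FFT-based momentum density, and Riemann-sum entropies. Everything specific here is an assumption: the potential $(1 - 2\beta)x^2 + \beta x^4$ is a hypothetical stand-in with its critical point at $\beta = 1/2$, the grid sizes are untuned, and finite differences replace the paper's diagonalization scheme, so the printed numbers are not the paper's results.

```python
import numpy as np

BBM_BOUND = 1.0 + np.log(np.pi)  # one-dimensional entropic bound, hbar = 1


def landau_potential(x, beta):
    """Hypothetical stand-in: the quadratic term vanishes at beta = 1/2."""
    return (1.0 - 2.0 * beta) * x**2 + beta * x**4


def eigenstates(M, beta, n_states=2, x_max=8.0, n_grid=800):
    """Finite-difference eigenpairs of p^2/(2M) + V(x), hbar = 1."""
    x = np.linspace(-x_max, x_max, n_grid)
    dx = x[1] - x[0]
    t = 1.0 / (2.0 * M * dx**2)
    off = -t * np.ones(n_grid - 1)
    H = np.diag(2.0 * t + landau_potential(x, beta)) + np.diag(off, 1) + np.diag(off, -1)
    energies, vecs = np.linalg.eigh(H)
    return x, energies[:n_states], vecs[:, :n_states] / np.sqrt(dx)


def shannon(step, rho):
    """-sum(rho * ln rho) * step, dropping zeros (0 ln 0 = 0)."""
    rho = rho[rho > 0.0]
    return float(-np.sum(rho * np.log(rho)) * step)


def entropies(x, psi, pad_factor=8):
    """Position and momentum Shannon entropies of a gridded wavefunction."""
    dx = x[1] - x[0]
    n_fft = pad_factor * len(x)
    phi = np.fft.fft(psi, n=n_fft) * dx / np.sqrt(2.0 * np.pi)
    dp = 2.0 * np.pi / (n_fft * dx)
    return shannon(dx, np.abs(psi) ** 2), shannon(dp, np.abs(phi) ** 2)


def scan(M, betas, n=0):
    """List of (beta, S_x, S_p) for eigenstate n along the control parameter."""
    rows = []
    for beta in betas:
        x, _, psi = eigenstates(M, beta, n_states=n + 1)
        s_x, s_p = entropies(x, psi[:, n])
        rows.append((beta, s_x, s_p))
    return rows


if __name__ == "__main__":
    for beta, s_x, s_p in scan(M=50.0, betas=np.linspace(0.0, 1.0, 11)):
        print(f"beta={beta:.1f}  Sx={s_x:.4f}  Sp={s_p:.4f}  "
              f"Sx+Sp={s_x + s_p:.4f}  bound={BBM_BOUND:.4f}")
```

Under these assumptions the printed sum should sit at the bound for $\beta = 0$, where the stand-in potential is harmonic, and above it elsewhere. Comparing `scan(M=1.0, betas=[0.5])` with `scan(M=50.0, betas=[0.5])` illustrates the dilation argument for a pure quartic: $S_x$ and $S_p$ shift individually while their sum stays essentially unchanged.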

In this paper, we have studied the information entropy of a non-analytically solvable potential by adopting the diagonalization scheme. Concretely, we have investigated the position and momentum quantum information entropies of a one-dimensional system with a schematic “Landau” potential, in which varying the control parameter $\beta$ can lead to a second-order quantum phase transition. We found that the entropy densities $\rho_s(x)$ and $\rho_s(p)$ at the critical point $\beta_c$ generally exhibit multi-peak distributions that are symmetric with respect to $x = 0$ or $p = 0$. We also found that the position and momentum information entropies $S_x$ and $S_p$ vary with the mass parameter $M$ and the quantum number $n$, but for a given $n$ their sum $S_x + S_p$ remains constant, independent of $M$. Further calculations show that both $S_x$ and $S_p$ change rapidly and non-monotonically around the critical point $\beta_c$, which confirms that the quantum phase transition can indeed be characterized by the evolution of the quantum information entropies. Finally, the results indicate that the entropic uncertainty relation given in Eq. (

| 1 | |

| 2 | |

| 3 | |

| 4 | |

| 5 | |

| 6 | |

| 7 | |

| 8 | |

| 9 | |

| 10 | |

| 11 | |

| 12 | |

| 13 | |

| 14 | |

| 15 | |

| 16 | |

| 17 | |

| 18 | |

| 19 | |

| 20 | |

| 21 | |

| 22 | |

| 23 | |

| 24 | |

| 25 | |

| 26 | |

| 27 | |

| 28 | |

| 29 | |

| 30 | |

| 31 | |

| 32 | |

| 33 | |

| 34 | |

| 35 | |

| 36 | |

| 37 | |

| 38 | |

| 39 | |

| 40 | |

| 41 |